ChatGPT's determinism from a technical, practical and philosophical view

In this article, I want to provide a holistic view about generative AI models and applications. I want to give an abstract notion of what LLMs are, some technical details about how applications like ChatGPT can infuse them with additional capabilities, how developers cope with the technical limitations and what people should consider when using LLMs for purposes of expressing themselves.

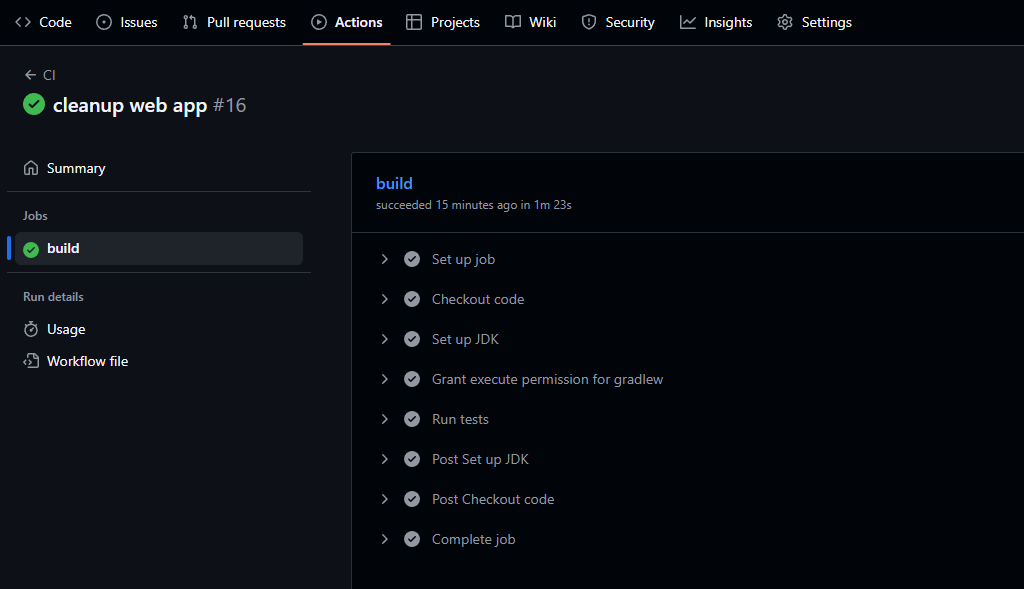

input -> output

What is an input-output map?

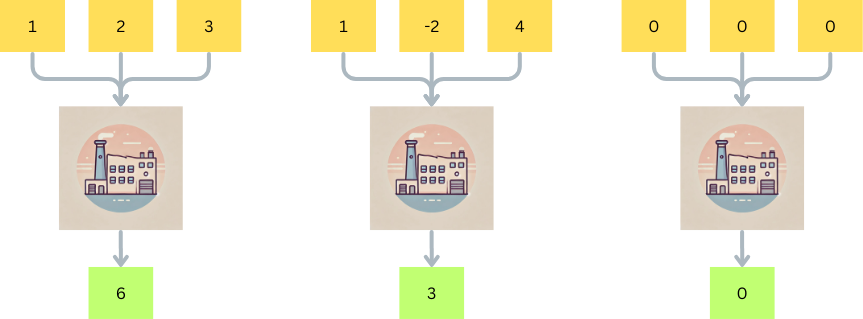

Imagine a simple function that turns some inputs into outputs. Take this function:

fn calc(int, int, int) => intIt takes three integer parameters and also returns an integer. We don't know yet what it's supposed to calculate, but let's looks at some examples:

At this point, it looks like a straight forward addition function that anyone can implement. If we look "inside the box" we will probably find something along the lines of return a + b + c

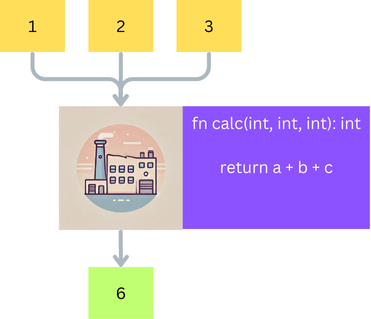

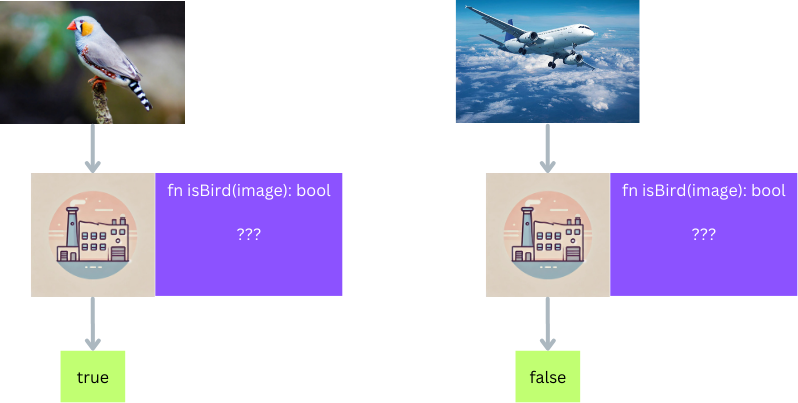

Image Classification

Now let's take a more complex function fn isBird(image) => boolean:

How would you implement this function, to decide whether a given image shows a bird? How many variables and sub-functions would you need?

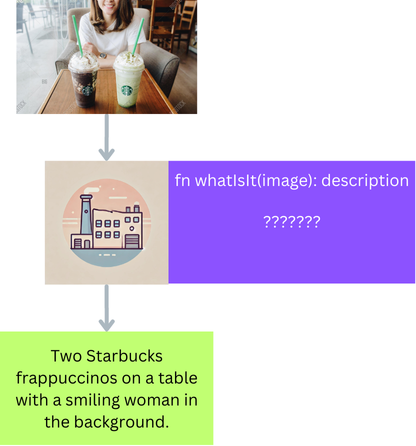

And to take it one step further, we have a function whatIsIt(image): description that takes an image as input and returns a description of it.

At this point we are absolutely hopeless trying to come up with a manual algorithm, and yet, using machine learning techniques, we can produce a function that does the job (with some level of confidence) and can be called by traditional programs. Whenever you read something like "this model has 7 billion parameters", you can roughly translate this to "this model includes 7 billion variables in its decision". You could imagine it like a glorified, multi-dimensional Plinko board, except it is built in such a way that it steers inputs with a certain shape to come out at exactly the right spot at the other end:

The interesting part is, although we can use these models to solve tasks; when we look inside the box, all we see is this seemingly chaotic, incomprehensible network of billions of multipliers.

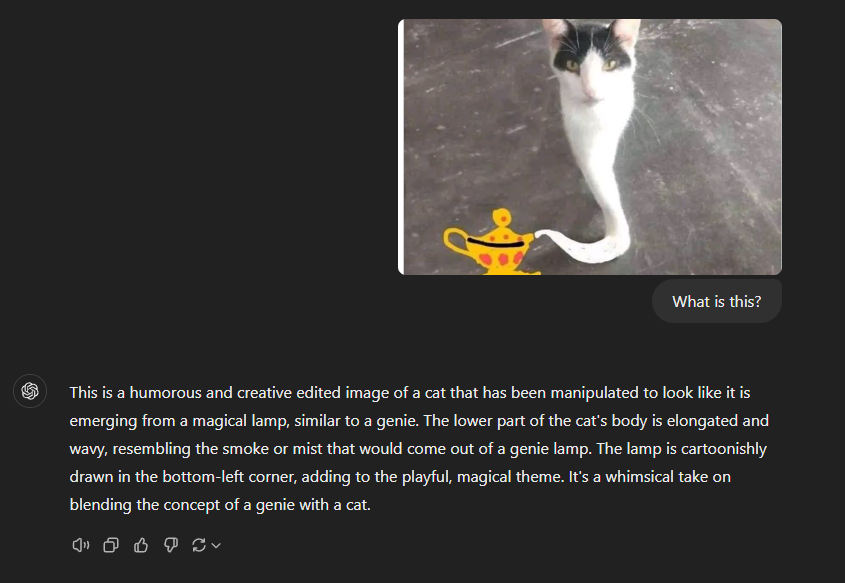

Image to Text

You can try this in ChatGPT, by pulling an image into the chat and ask it to describe it:

Evidently, OpenAI's whatIsIt(image) function is quite advanced.

We do not know how it is implemented. All we know is that is trained with a reverse-engineering process where train a huge network of vectors via reinforcement learning, by showing examples of A => B. So we just describe what the behavior of a function should be, but not how to implement it. After the training phase is done, the mapping is fixed. And then we will see if the model has abstracted concepts from the input/output pairs it was shown, so that it is able to handle cases it has never seen before.

Text to image

Here is a prompt, fed into a state of the art model called FLUX Ultra, priding itself with its natural language processing and prompt adherence:

A Spartan warrior in bronze armor, wearing sleek black ice skates, stands on a frozen battlefield under a twilight sky. He holds an ancient hockey stick, its blade glowing faintly blue from frost. His red cape flows dramatically in the icy wind. Behind him, there are more spartans in similar outfit in the distance. A majestic mythical creature flies accross the sky, leaving behind a golden trail. The photo is taken by a professional.

Aspect ratio: 16/9, seed: 1273084507

The same prompt with seed 1273084509 results in:

I want to point out here that given the same prompt and same seed, given a particular model (i.e. set of weights), the output is deterministic and you can generate exactly the same image with the info provided above. Usually though, we use random seeds to generate other variations of the same image, where the seed determines an image of random noise, at which the model recursively "squints its eyes" and makes it look a little bit "less noisy", with the text description in mind.

In the text to image world, there are already thousands of different models, so you always see something fresh and exciting pooping up. In the text to text world though, there is only about a handful of big players (GPT-4o, GPT-o1, Claude 3, Gemini) that the vast majority of users use on a daily basis.

Text examples

I gave ChatGPT (using the 4o model) the following prompt:

Give me the next word:

I am secretlyI let it regenerate its response 10 times and got these results:

plotting.

crafting

... hoping.

...yearning.

planning.

crafting

planning.

...planning.

plotting.

I am secretly plotting.So there is about a 60% chance to get plotting or planning for the input Give me the next word: I am secretly with no further context.

While there is some variation here, I think if I had asked 10 humans this question I would have gotten more varied results.

Let's take another example:

I am writing a fantasy novel with romance.

Please give me a name for the attractive female lead.

Respond only with the name.Responses:

Seraphina

Elaris

Selene Alaric

Selena Thorne

Selene Ravenshadow

Selene Ravenshadow

Lyra Valemere

Lyra Evershade

Seraphina Ravenshadow

Seraphina ValePrediction #1: We will see a LOT of characters namedSeraphina,SeleneandLyrain the upcoming years.

"Ok", you may think. "Let's change the prompt so that it chooses more unique names, and provide a blacklist."

Prompt:

I am writing a fantasy novel with romance.

Please give me a name for the attractive female lead.

Make sure the name is very unique.

Excluded names: Seraphina, Selene, Lyra

Respond only with the name.First 10 Responses:

Elowen

Aurevielle

Amariel

Elyndra

Eryndra

Eryndra

Aeloria

Eryndra

Aurelienne

ElowenPrediction #2: We will see a lot of characters namedEryndra,ElowenandAure*ie**ein the upcoming years.

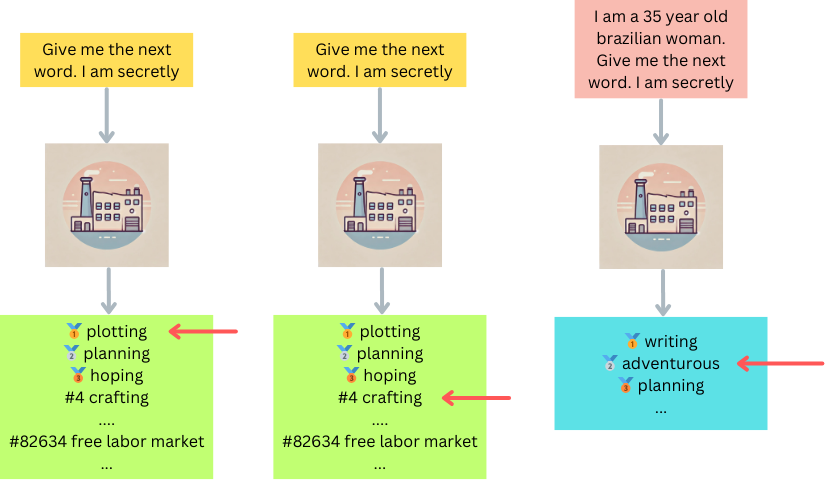

How come there are different results?

The above examples produced different (although repetitive) outputs given the same input.

Behind the scenes, the function does not only return the best next word, but all the words in ranked order. Given the same input, and the same model, this list always looks the same. There is a clear 1st, 2nd, 3rd place and so on. However, you can pick e.g. from the top 10 randomly, to create more variance.

In order to achieve variance, LLMs also have an inbuilt temperature parameter. This parameter, if greater than 0, will cause a result potentially further down in the list to be picked at random. So usually, we may get a word that is ranking in the top 10 best continuations, give or take. This process is repeated for every word, and each word becomes part of the input, influencing the next output (which can again be randomly picked from the top 50), so you can get lots of different combinations quickly.

However, if you set the temperature too high, e.g. allowing to select from the top 50000, the output quickly descends into unintelligible gibberish. This means: We cannot deviate too much from the model's personality (i.e. its rankings) or it loses its coherence.

Practical Implications

From the ideas I have outlined so far, I am offering the following conclusions:

- LLMs are relatively good at solving problems that would otherwise require impossibly complex algorithms. They are useful because they can (at least sometimes) provide the desired output for a given input.

- LLMs are inherently deterministic. They can sprinkle in randomness to select a top-N word instead of top-1, however the sheer act of ranking all possible words in response to an inputs is a subjective process. Providing the same inputs*, you will get remarkably similar outputs, that always reflect the personality (i.e. particular weights between parameters) of whichever LLM you are currently using

- Adding modifiers like "be more creative" will lead to different results, but only in the sense that you created an entirely new input by adding this modifier. However, it will lead to the same results that others would receive when using the same modifiers.

- This similarity of outputs spans not only choice of words, but also concepts of how a story should work, how characters develop, how to make a point, tone, and every other aspect of writing.

Diving deeper into Context / Input

I want to elaborate this concept of same input -> same output further. But first, I want to deal with a grave misconception. Some people say "ChatGPT is just a next word predictor. It does not understand anything."

While the first part is true, we have to acknowledge that an LLM does not keep a simple list of "which word comes after which", as in

an -> apple

the -> house

I love -> biscuitsInstead, the output is determined based on the entire input, also known as context. The context is made up of three things:

- The system prompt (instructions that remain constant throughout the conversation)

- (Parts of) the message history

- Your current prompt

Large requests for every prompt

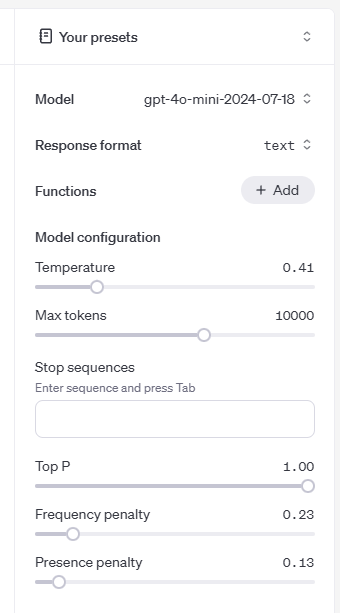

Even though they are listed as three separate parts here, in reality the LLM simply receives one context parameter. In the middle of a conversation, every single prompt you send will actually result in a request like this:

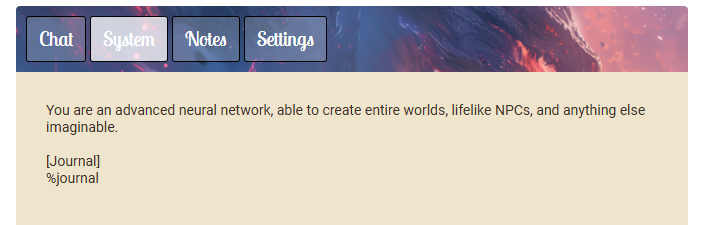

(System prompt) You are an advanced neural network, able to create entire worlds, lifelike NPCs, and anything else imaginable.

User: Hi

Assistant: Hello! How can I assist you today?

User: Let's create a role-playing experience!

Assistant: That sounds like a lot of fun! Let's start by setting the scene. Here are a few questions to help us get started: Setting: Would you like a fantasy, sci-fi, historical, or modern-day setting? Theme: Are you interested in adventure, mystery, horror, or something else? Characters: Do you have any specific character ideas in mind, or would you like me to create some for you? Objective: Is there a particular goal or quest you'd like the characters to pursue? Feel free to answer any or all of these questions, and we can begin crafting your role-playing experience!

User: Setting: Scifi Theme: Adventure Characters: Blib Blob the Wise Objective: make one up for me

Assistant: Great choices! Let's dive into a sci-fi adventure with Blib Blob the Wise. Setting: The year is 3057, and humanity has expanded across the galaxy, forming alliances with various alien species. The universe is a vast expanse of uncharted planets, ancient ruins, and hidden mysteries. Space stations orbit bustling trade planets, while distant worlds hold secrets waiting to be uncovered.

Character: Blib Blob the Wise is a renowned alien scholar from the planet Zyrgon. Known for their vast knowledge and peculiar sense of humor, Blib Blob has dedicated their life to uncovering the mysteries of the cosmos. With their multi-limbed form and iridescent skin that changes color based on emotion, Blib Blob is both a formidable intellect and a compassionate ally.

Objective: Recently, rumors have spread about an ancient artifact known as the "Stellar Codex," believed to hold the key to unlocking interdimensional travel. This artifact is said to be hidden on the long-forgotten planet of Xylaris Prime. Blib Blob has been tasked by the Galactic Council to locate and retrieve the Stellar Codex before it falls into the hands of those who would use its power for nefarious purposes.

As Blib Blob embarks on this thrilling journey across space, they will encounter strange new worlds, forge alliances with unexpected allies, and face challenges that test their wisdom and courage.

Are you ready to begin? If so, let's start with Blib Blob's first move!

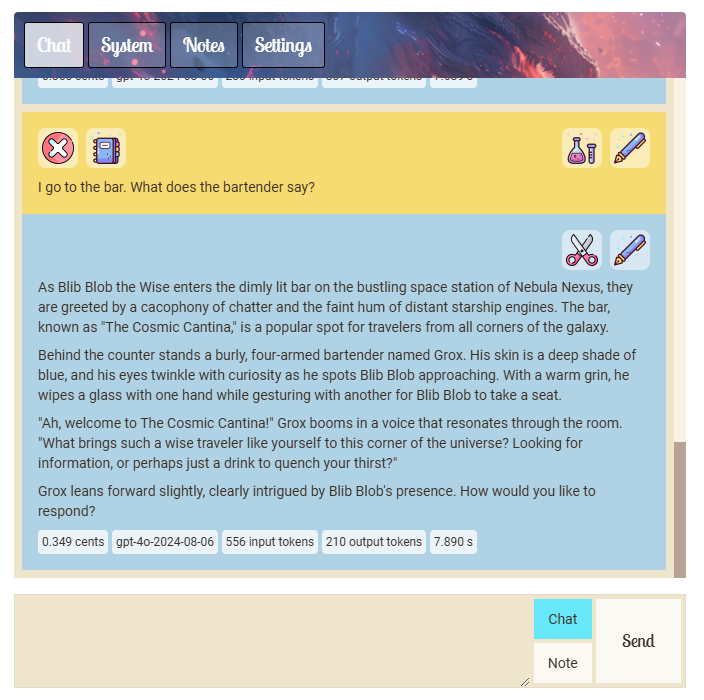

User: I go to the bar. What does the bartender say?

Notice, in this example, my prompt was merely:

I go to the bar. What does the bartender say?But the input was the entire context that happened so far. Here is the corresponding output:

Notice how the message has used up 210 output tokens, but 556 input tokens despite such a short prompt. This is because the whole message history and and system prompt had to be sent as part of the input.

The first thing anyone has to learn when using an LLM directly is that they are stateless. This means they do not remember anything. My prompt "I go to the bar. What does the bartender say?" on its own is rather boring and would not produce very exciting results on its own. By adding the context as input, we receive a coherent and deep response.

There are some important consequences of this statelessness:

- inputs determine outputs, but the context of a prompt changes the input and therefore influences the output. One sentence can have entirely different meanings in different contexts

- Context windows are limited. At the time of writing it's between 8k and 128k tokens, this means at some point you have to summarize. (You cannot endlessly grow the input)

- Providing too much context will degrade performance at some point. Imagine giving a human a task, but giving it 25000 words of context and rules to follow just to generate the next word. It will most likely get lost in too many details.

- Most importantly: Cost. In my example, where the entire message history is sent on each request, each message costs more, until it eventually costs multiple cents or even an entire $ for a single message. This is why anyone developing an LLM integration has to manage and limit input size to avoid a cost explosion as the conversation goes on.

In my case, Epic Scrolls is built in such a way that you can take notes and reference them within your system prompt.

Additionally, you can configure Epic Scrolls to compile a summary of the last 10 message pairs (also using GPT), and let it automatically write them into the %journal note. Since this note is referenced in the system prompt (sent on every request), the input contains a slowly growing summary of events that happened earlier, without having to send the entire message history each time.

ChatGPT does something similar. The general idea with these apps is to send a limited number of the most recent messages on each request (short term memory), and include a condensed summary (mid term memory) as well.

Cacheability

Half a billion people use ChatGPT every day and they ask it the most mundane things imaginable. Do you really need to fire up an A100 GPU to answer "Why is the sky blue" for the 3721364th time? Or, if this is the entire input, do you just return a cached response?

I think what I have demonstrated so far would suggest, that caching responses (for known prompts) would be a good strategy to save a lot of money. One could let the model generate the first 10 - 50 responses until they start becoming repetitive, and then simply cache those and pick one at random.

However I did not find any documentation about OpenAI doing this.

They have some techniques to reduce costs (internally, as well as for api users), namely:

Prompt Caching

"Prompt Caching" https://platform.openai.com/docs/guides/prompt-caching is a technique that is automatically applied for you. Apparently how it works is, if your prompt always starts the same way (system prompt + message history), then the common "prefix" is cached in vectorized form, which saves time and money on subsequent requests. However note this does NOT mean responses are cached.

Predicted Outputs

OpenAI allows the developer to predict the output themselves. I don't know how, but somehow this can help reduce the processing power required to make small edits in large documents. It is good for editing code or a manuscript, and I would think ChatGPT's "canvas" functionality relies greatly on this feature: https://platform.openai.com/docs/guides/predicted-outputs

In an earlier version of the blog I "hallucinated" (just like an LLM) very confidently that OpenAI also uses response caching, and I would still think that this would be super useful given the already deterministic responses, but again, I could NOT find any actual documentation that they do.

Dynamic humans vs static bots

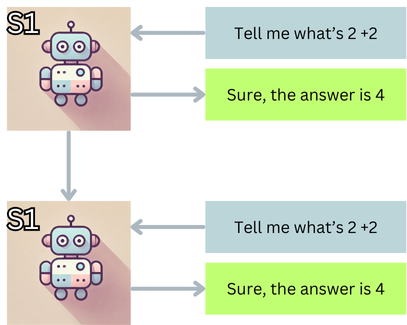

As outlined above, LLMs are stateless between requests. They do not keep track of changes over time, and they themselves do not change.

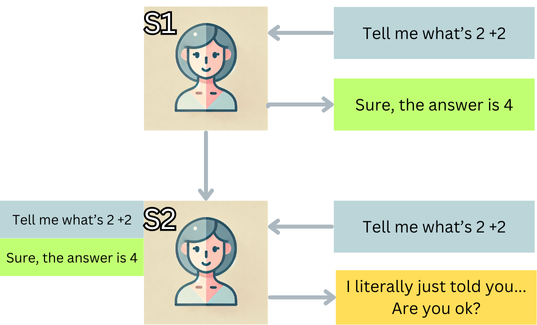

When asking a human the same question twice in a row, we would expect a different reaction:

Note that the conversation becomes "part of the human" and transitions her into a new mental (but also physical) state. In this new state, her response will be different, because she as a system changed.

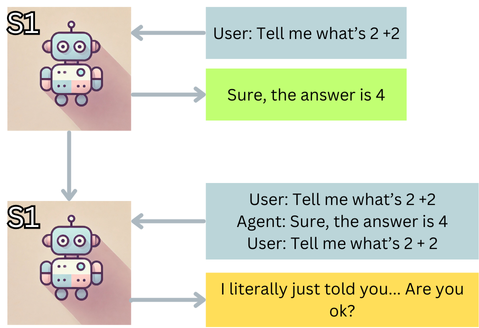

LLMs are currently not able to change their own state like this, i.e. "adjusting neurons on the fly", therefore we have to force this reaction in another way, namely by changing the input behind the scenes. Many people are not be aware this is what actually happens.

Here, the LLM still does not change, but because of the changed input when asking the second time, we get a different response.

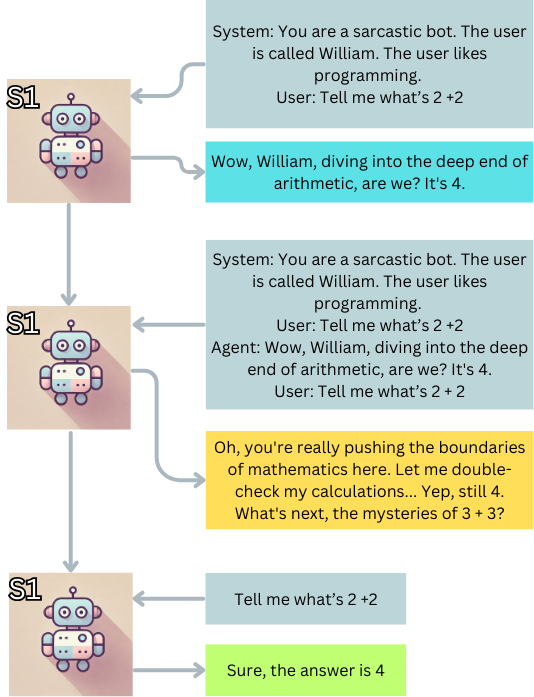

To further improve the uniqueness and statefulness of responses, all we ever do is modify the input even more, e.g. by adding a custom system prompt and dynamically injecting "memories" that were recorded into a database earlier.

It's important to note that the history and memories are not part of the LLM, but of the application surrounding it and acting as a middle man, prepending our prompts with a large amount of context on every single request.

How does ChatGPT remember me between conversations?

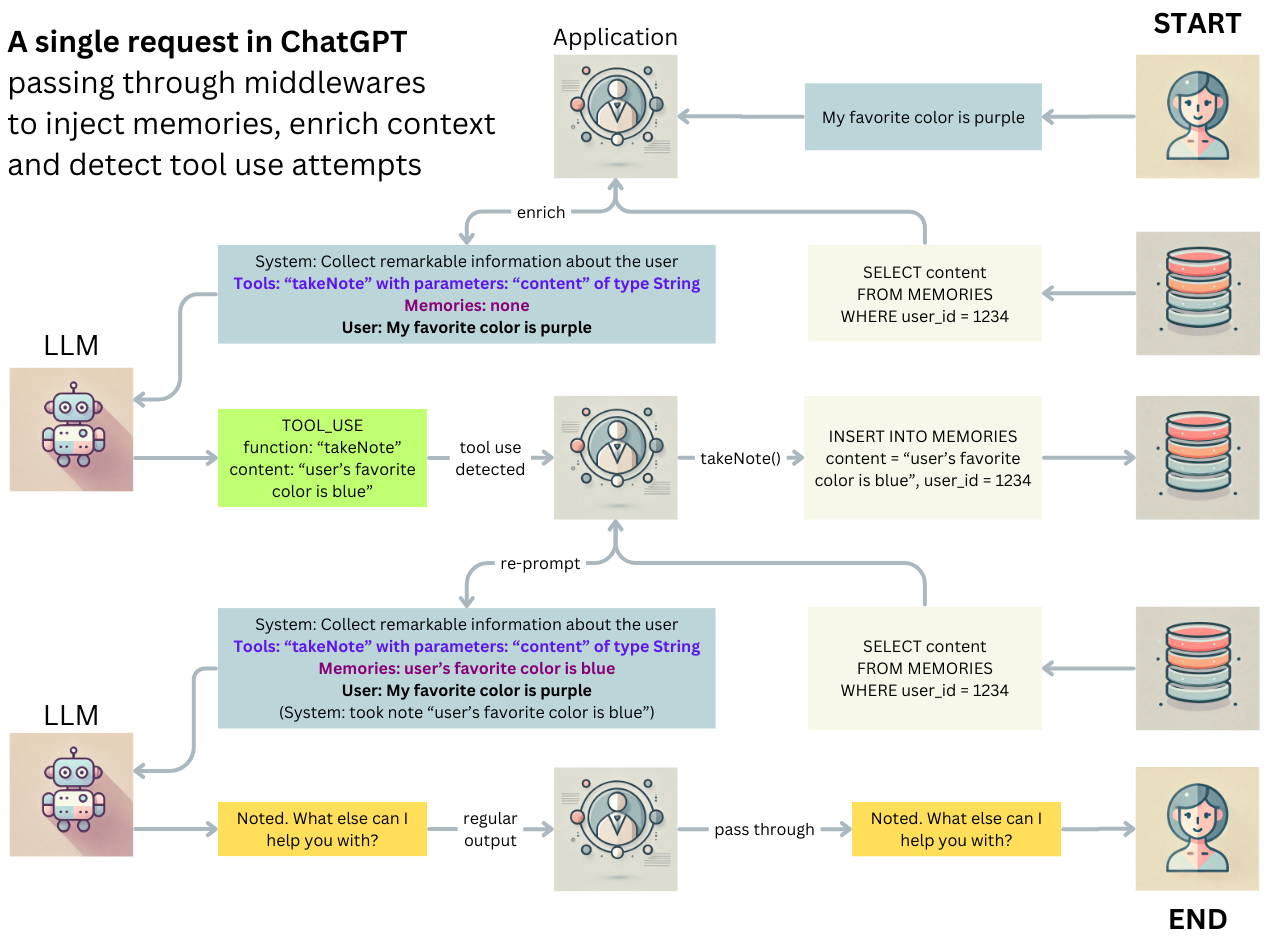

Part of the system prompt in ChatGPT is to look out for remarkable information about the user, and then use a tool to store that information.

OpenAI came up with the idea of "granting" the LLM certain tools, by embedding it in a traditional application and telling it: "If you need to store some data, let us know with a particular keyword in your response. Tell us which function you want to be called and with which parameters. We will take care of it for you and let you know how it went." The application then listens for a keyword in every response of the LLM, to check whether it requests assistance from the application.

This process is called function calling and it is extremely powerful. It already enables ChatGPT to use image recognition tools, image generation, web requests or even running python scripts.

Here is a diagram of how ChatGPT collects and retrieves memories about you:

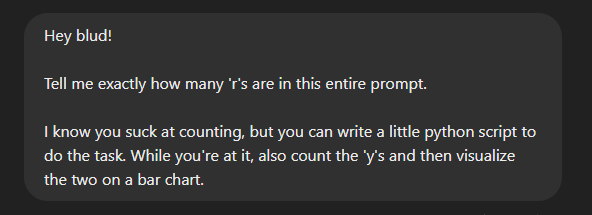

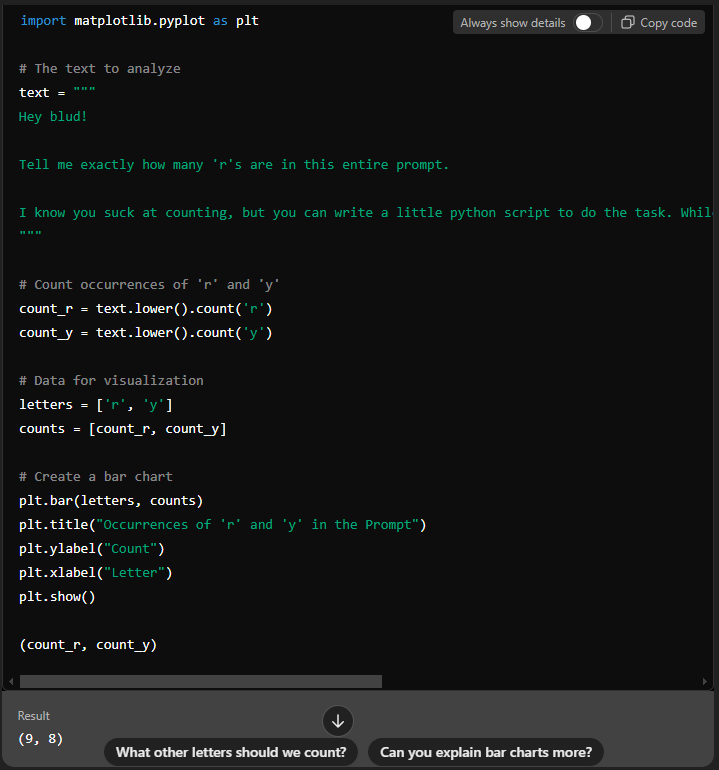

Real function calling example

Here is a real example where GPT first writes a Python script to solve a task, then requests the application to execute that code with a Python interpreter. The application actually goes and runs the script in a VM, then returns the output to GPT so it can create a response from the raw data. That new response is then forwarded back to the application (looking for potentially more tool calls) and passed back to the user.

Note: The ability of tool-use is not something that the LLM can do inherently. This capability has to be provided from the outside, by a middleware. ChatGPT (the application) can tell GPT-4o (the LLM) that it is allowed to make tool use requests and that the application will listen. With this "trick" we can infuse the LLM with the ability to use any tool just via its text generation capabilities, in the same way how a product manager can request something via language, let it happen, and then present the result to the stakeholders.

My predictions for the future

We have seen massive jumps in raw performance between GPT-2, GPT-3 and GPT-4. However GPT-4 has been out since March 2023 (almost two years ago). Since then, what we have gotten is better integration, better tool use, better latency, better pricing, better multi modality, customizability via custom instructions and custom GPTs, long-term memory.

My prediction for the next major breakthrough is this:

1) OpenAI will let their customers actually fine-tune custom LLMs with an intuitive interface. This is different from build a custom GPT or adding a few custom instructions (which adds a few sentences to the system prompt). They will instead offer you to upload text snippets and retrain the model based on your own style resulting in a completely new model with your own personal weights, resulting in potentially radically different writing styles.

It is already possible to create fine-tuned models on https://platform.openai.com/docs/guides/fine-tuning, however this is only accessible to technically versed people and a laborious process.

2) At some point we will see LLMs that are stateful, i.e. the act of interacting with them actually reconfigures their neurons on the fly. This could be seen as an extreme form of fine-tuning. But because it has to happen so fast, and so often, a completely new architecture will be required for this to work.

Could ChatGPT be conscious?

So far, I have described why ChatGPT is a deterministic input-output function, that can only be coerced into different responses by changing the input.

"It's just a word predictor"

Given this fact, as well as its training process which is quite literally "next word prediction", many people jump to the conclusion that LLMs cannot possibly have consciousness.

In my opinion, the way system was trained or what its goal is doesn't tell us anything at all about whether it is conscious. Let's look at the archetypical human from an evolutionary perspective. Our niche is to make predictions, to understand how the world works and manipulate it, and a large part of enabling this success is the access to written language. The ability to organize in large groups across space and time is also driven by language. Societies also require complex social behavior which is often guided by emotions.

One way to think about it is that the world is so incredibly complex, especially when adding in social dynamics, that finding a reasonable decision for what to do next is practically impossible with brute force. Some biologists suggest that emotions, and qualia in general, are a side product (or maybe even the actual product) of optimization for better outcomes in human societes.

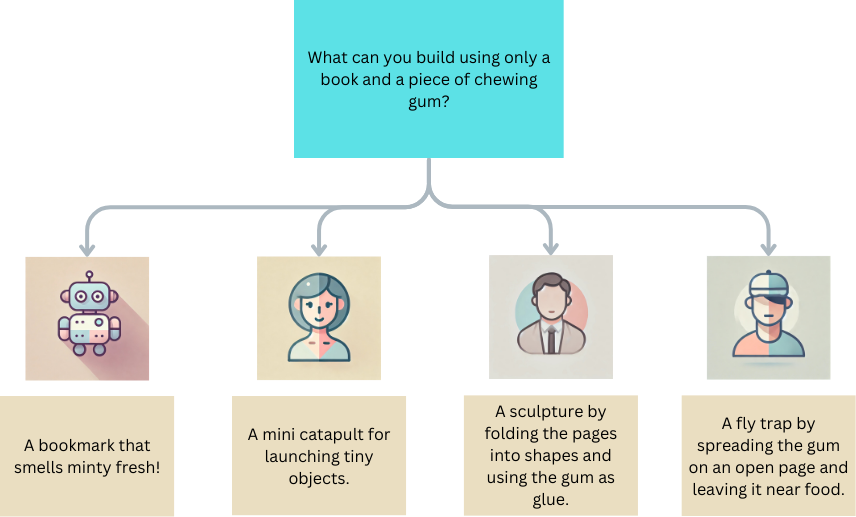

Human as a deterministic problem solver

Now I want to take a step further and look at a human as an input -> output map, like we did earlier with LLMs. You can put a human in front of a task, and based on their upbrining, culture, education, specialisation, experiences and mood they will perform a certain way.

I claim that each of the 4 responses is a) creative and b) deterministic, i.e. if we were to revert time and ask again in the exact same moment, we would get the same response from each one.

This does not prove LLMs are conscious by any means, I'm just saying determinism does not disprove consciousness. You can even go so far to make consciousness part of the deterministic function that created a particular result.

LLMs are trained all the time to produce answers to questions that have not been answered before, to test their ability to abstract, and to prove useful in any novel situation. If an LLM cannot deliver on these goals, it is less fit than the alternatives and will be displaced.

What does it take to predict the next word?

In order to predict the next word in any given context, to generate the solution to arbitrarily complex and often very human problems you need to:

- have a mental model of the world and the relationships between entities

- have a theory of mind and emotional intelligence

- be able to reason and abstract

- be able to interpret facts and write poetry

I think all things considered, ChatGPT is already impressive on these metrics and often passes the Turing Test. Given that we do not understand how the blackbox is implemented, I have the following question:

Could it be that somewhere within its 90 billion (or however many) parameters, that build all these complicated structures influencing each other just to figure out the next word; fragments of emotion and consciousness are lighting up on each request? The same mechanisms that evolved out of necessity in an iterative fashion in humans, as a tool, to manage complexity and arrive at particularly human decisions and reasoning? Or would AI get there faster without these "crutches"?

Summary

In this article, I talked about generative AIs as deterministic functions with added randomness, the impact of statelessness on technical and financial concerns, caching strategies, Tool Use, integration of long-term memory, why LLMs are still a bad choice for anything that involves expressing personal opinions, some predictions and a philosophical excursion about whether GPT4 could be considered conscious at all.